In this article, we look at how to do autoscaling in Kubernetes, covering the details with supporting diagrams.

How To Do Autoscaling in Kubernetes?

Purpose

Kubernetes is, at its core, a resource management and orchestration tool. So far we have spent our operations time exploring its features to deploy, monitor, and control application pods.

However, we also need to address questions such as:

- How are we going to scale pods and applications?

- How can we keep containers healthy and running efficiently?

- As user journeys and workloads keep changing, how can we keep up with the variation?

- How can we harness the benefits of autoscaling to optimize infrastructure costs?

Getting a Kubernetes cluster to balance resources and performance can be perplexing and requires specialist knowledge of Kubernetes' inner mechanisms. The workload of your apps and services is not constant; it fluctuates throughout the day, if not the hour. Think of autoscaling as a journey and a continuing process.

Business Benefits of Autoscaling in Kubernetes

- No Upfront Capital Expenditures

- Availability: Autoscaling keeps track of your application's demand and makes sure the application has the right amount of capacity to meet traffic needs.

- Don't Guess on Capacity, Easily Scale Up or Down: handle peak loads and traffic, or size for average daily usage, without suffering outages during peak times.

- Improved Agility and Faster Time to Production

Autoscaling in Kubernetes is just one of the ways we help save money while giving clients the flexibility to scale their applications to meet the demands of their customers.

Implementation

We have installed Metrics Server on all the clusters to fetch metrics. A few highlights:

- Metrics Server is a cluster-wide aggregator of resource usage data.

- Metrics Server collects metrics from the Summary API, exposed by the kubelet on each node.

- Metrics Server enables HPA to scale the number of pod replicas, using metrics such as CPU and memory as the triggers to scale up or down.

- Since the read-only port is disabled per the CIS Benchmarks, we have enabled webhook token authentication on the kubelets so that metrics-server can talk to them using its service account.

- These metrics can either be accessed directly by the user, for example with the kubectl top command, or used by a controller in the cluster, e.g. the Horizontal Pod Autoscaler, to make decisions.

Cluster Autoscaler (CA) scales your cluster nodes based on pending pods. It regularly checks for any pending pods and increases the size of the cluster if more resources are needed and if the scaled-up cluster is still within the user-provided restrictions.

We have deployed the cluster-autoscaler chart on all the clusters and added cloudLabels to the kops instance groups, which eventually become tags on the respective Auto Scaling groups:

spec:
  cloudLabels:
    k8s.io/cluster-autoscaler/enabled: "true"
    kubernetes.io/cluster/<name-of-cluster>: owned

Kubernetes Autoscaling Building Blocks

Autoscaling in Kubernetes requires coordination between two layers of scalability:

- Pod-level autoscalers: the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA). Both scale the resources available to your containers.

- Cluster-level scalability, which is managed by the Cluster Autoscaler (CA). It scales the number of nodes in your cluster up or down.

Horizontal Pod Autoscaler (HPA)

As the name denotes, HPA scales the number of pod replicas. Most DevOps teams use CPU and memory as the triggers to scale the number of pod replicas up or down. However, you can also configure it to scale your pods based on custom metrics, multiple metrics, or even external metrics.

We will focus on CPU and memory for now to configure HPA. Custom metrics are served by the custom.metrics.k8s.io API, which is provided by "adapter" API servers supplied by metrics solution vendors.

Check with your metrics pipeline or the list of known solutions. If you would like to write your own, check out the boilerplate to get started.

The Horizontal Pod Autoscaler automatically scales the number of pods in a replication controller, deployment, or replica set based on observed CPU utilization (or, with custom metrics support, on some other application-provided metrics).

Note that Horizontal Pod Autoscaling does not apply to objects that can't be scaled, for example DaemonSets.

The Horizontal Pod Autoscaler is implemented as a Kubernetes API resource and a controller. The resource determines the behavior of the controller. The controller periodically adjusts the number of replicas in a replication controller or deployment to match the observed average CPU utilization to the target specified by the user.

Horizontal Pod Autoscaler, like every API resource, is supported in a standard way by kubectl. We can create a new autoscaler using the kubectl create command. We can list autoscalers with kubectl get hpa and get a detailed description with kubectl describe hpa. Finally, we can delete an autoscaler using kubectl delete hpa.

In addition, there is a special kubectl autoscale command for easy creation of a Horizontal Pod Autoscaler. For instance, executing kubectl autoscale rs foo --min=2 --max=5 --cpu-percent=80 will create an autoscaler for replica set foo, with target CPU utilization set to 80% and the number of replicas between 2 and 5.

The example below depicts the definition of an HPA resource:

apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50

Notice that the targetCPUUtilizationPercentage field has been replaced with an array called metrics. The CPU utilization metric is a resource metric, since it is represented as a percentage of a resource specified on pod containers.

Notice that you can specify other resource metrics besides CPU. By default, the only other supported resource metric is memory.

These resources do not change names from cluster to cluster, and should always be available, as long as the metrics.k8s.io API is available.

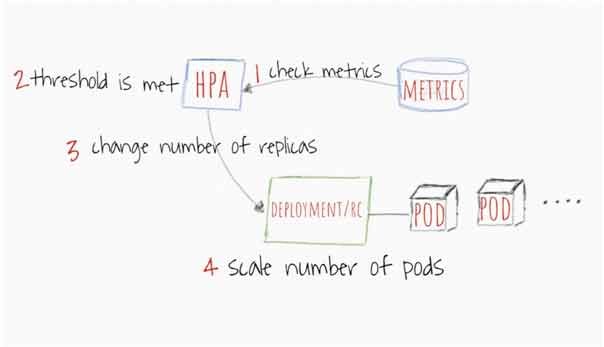

High-level HPA workflow

- HPA continuously checks the metric values you configured during setup, at a default interval of 15 seconds (30 seconds in older Kubernetes releases).

- HPA attempts to increase the number of pods if the specified threshold is met.

- HPA updates the number of replicas inside the deployment or replication controller.

- The deployment or replication controller then rolls out any additionally needed pods.

HPA Rollout Considerations

- The HPA check interval can be configured through the --horizontal-pod-autoscaler-sync-period flag of the controller manager.

- The default HPA relative metrics tolerance is 10%.

- HPA waits for 3 minutes after the last scale-up event to allow metrics to stabilize. In older Kubernetes releases this can be configured through the --horizontal-pod-autoscaler-upscale-delay flag.

- HPA waits for 5 minutes after the last scale-down event to avoid autoscaler thrashing. In older releases this is configurable through the --horizontal-pod-autoscaler-downscale-delay flag; newer releases use --horizontal-pod-autoscaler-downscale-stabilization instead.

- HPA works best with deployment objects as opposed to replication controllers. It does not work with rolling updates that directly manipulate replication controllers; it depends on the deployment object to manage the size of the underlying replica sets when you do a deployment.
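To make the workflow and the 10% tolerance more concrete, here is a minimal Python sketch of the ratio-based rule the Kubernetes documentation describes for HPA: the desired replica count is the current count multiplied by the ratio of observed to target utilization, rounded up. The function name and parameters are illustrative assumptions, and the sketch deliberately ignores details such as unready pods, missing metrics, and stabilization delays.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.10):
    """Simplified HPA decision (illustrative sketch, not the controller's code)."""
    ratio = current_utilization / target_utilization
    # If the ratio is close enough to 1.0, HPA skips scaling altogether.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Scale proportionally to the ratio and round up.
    desired = math.ceil(current_replicas * ratio)
    # Never leave the user-provided minReplicas/maxReplicas range.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 50% target
print(desired_replicas(4, 90, 50, min_replicas=1, max_replicas=10))  # prints 8
```

With a 50% target, an observed average of 52% falls inside the tolerance band, so the replica count stays where it is. That band is what keeps the autoscaler from reacting to small fluctuations.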

Vertical Pod Autoscaler (VPA)

The Vertical Pod Autoscaler (VPA) allocates more (or less) CPU or memory to existing pods. Think of it as giving pods some growth hormones. It can work for both stateful and stateless pods, but it is built mainly for stateful services.

However, you can use it for stateless pods as well if you would like to auto-correct the resources you initially allotted to your pods.

VPA can also react to OOM (out of memory) events. VPA currently requires pods to be restarted in order to change their allocated CPU and memory. When VPA restarts pods, it respects the PodDisruptionBudget (PDB) to make sure there is always the minimum required number of pods.

You can set the minimum and maximum resources that the VPA can allocate to any of your pods. For example, you can cap the memory it allocates at 8GB.

This is particularly useful when you know that your current nodes cannot allocate more than 8GB per container. Read the VPA's official wiki page for the detailed spec and design.

VPA also has an interesting feature called the VPA Recommender. It watches the historic resource usage and OOM events of all pods to suggest new values for the "request" resources spec.

The Recommender calculates memory and CPU values from historic metrics. It also provides an API that takes a pod descriptor and returns suggested resource requests.
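The idea of deriving a request from historic usage can be illustrated with a small Python sketch. This is not the VPA Recommender's actual algorithm; it is an assumed, simplified stand-in that takes a high percentile of past usage samples, adds a safety margin, and clamps the result to the configured minimum and maximum. The function name, the 90th percentile, and the 15% margin are all hypothetical choices made for illustration.

```python
def recommend_request(samples, percentile=90, margin_percent=15,
                      min_allowed=None, max_allowed=None):
    """Suggest a resource request from usage samples (illustrative only)."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile, computed with integer math (ceiling division).
    rank = max(1, (percentile * len(ordered) + 99) // 100)
    base = ordered[rank - 1]
    # Add headroom on top of the observed usage.
    suggestion = base * (100 + margin_percent) / 100
    # Clamp to the allowed range, mirroring the min/max a user can set.
    if min_allowed is not None:
        suggestion = max(min_allowed, suggestion)
    if max_allowed is not None:
        suggestion = min(max_allowed, suggestion)
    return suggestion


# Memory usage samples in MiB, capped at 8192 MiB per container
usage_mib = [100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]
print(recommend_request(usage_mib, max_allowed=8192))
```

The clamping step is what corresponds to limiting VPA to, say, 8GB per container: however high past usage was, the suggestion never exceeds what the nodes can actually provide.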

High-level VPA workflow

- VPA continuously checks the metric values you configured during setup, at a default interval of 10 seconds.

- VPA attempts to change the allocated memory and/or CPU if the threshold is met.

- VPA mainly updates the resources inside the deployment or replication controller specs.

- When pods are restarted, the new resources are applied to the newly created instances.

VPA Rollout Considerations

- Changing resources is not yet possible without restarting the pod. The main rationale so far is that such a change may cause a lot of unpredictability. Hence the approach of restarting the pods and letting them be scheduled based on the newly allocated resources.

- VPA and HPA are not yet compatible with each other and cannot work on the same pods. Make sure you separate their scope in your setup if you are using them both inside the same cluster.

- VPA adjusts only the resource requests of containers, based on past and current observed resource usage. It doesn't set resource limits. This can be problematic with misbehaving applications that start using more and more resources, leading to pods being killed by Kubernetes.

Cluster Autoscaler

Cluster Autoscaler (CA) scales your cluster nodes based on pending pods. It regularly checks whether there are any pending pods and increases the size of the cluster if more resources are needed and if the scaled-up cluster is still within the user-provided restrictions.

CA interfaces with the cloud provider to request more nodes or deallocate idle nodes. It works with GCP, AWS, and Azure.

High-level CA workflow

- The CA checks for pods in a pending state at a default interval of 10 seconds.

- If one or more pods are pending because the cluster does not have enough available resources to allocate to them, the CA attempts to provision one or more additional nodes.

- When the node is granted by the cloud provider, it joins the cluster and becomes ready to serve pods.

- The Kubernetes scheduler allocates the pending pods to the new node. If some pods are still pending, the process is repeated and more nodes are added to the cluster.
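The scale-up steps above can be sketched in Python as a simple packing problem. This is a rough illustration under stated assumptions, not the Cluster Autoscaler's real simulation: it considers CPU requests only, assumes a single node type, and uses a first-fit-decreasing heuristic. The function and parameter names are hypothetical.

```python
def nodes_to_add(pending_cpu_requests, node_cpu_capacity, current_nodes, max_nodes):
    """Estimate how many new nodes the pending pods need (illustrative only).

    CPU values are in millicores. max_nodes is the user-provided upper limit.
    """
    new_nodes = []  # free CPU left on each hypothetical new node
    for request in sorted(pending_cpu_requests, reverse=True):
        if request > node_cpu_capacity:
            continue  # this pod can never fit this node type; more nodes won't help
        for i, free in enumerate(new_nodes):
            if request <= free:
                new_nodes[i] = free - request  # place pod on an already planned node
                break
        else:
            if current_nodes + len(new_nodes) >= max_nodes:
                continue  # limit reached, the pod stays pending
            new_nodes.append(node_cpu_capacity - request)
    return len(new_nodes)


# Four pending pods, 2000m nodes, 3 nodes running, limit of 10
print(nodes_to_add([500, 1500, 1000, 2000], 2000, current_nodes=3, max_nodes=10))  # prints 3
```

If the limit were 4 nodes instead of 10, only one node would be added and the remaining pods would stay pending, which matches the rule that the scaled-up cluster must stay within the user-provided restrictions.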

CA Rollout Considerations

- Cluster Autoscaler makes sure that all pods in the cluster have a place to run, no matter whether there is any CPU load or not. Moreover, it tries to ensure that there are no unneeded nodes in the cluster.

- CA recognizes a need to scale in about 30 seconds.

- By default, CA waits 10 minutes after a node becomes unneeded before it scales it down.

- CA has the concept of expanders. Expanders provide different strategies for selecting the node group to which new nodes will be added.

- Use "cluster-autoscaler.kubernetes.io/safe-to-evict": "false" responsibly. If you set it on many of your pods, or on enough pods that they are spread across all your nodes, you will lose a lot of flexibility to scale down.

- Use PodDisruptionBudgets to prevent pods from being deleted in a way that leaves part of your application fully non-functional.
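The scale-down side of these considerations can be pictured with a short Python sketch. It assumes a simplified model in which each node records when it became unneeded and whether it hosts a pod that blocks eviction. The data layout and names are hypothetical and do not reflect the Cluster Autoscaler's internal structures.

```python
from datetime import datetime, timedelta

# Default wait before an unneeded node is removed, per the text above
SCALE_DOWN_DELAY = timedelta(minutes=10)


def nodes_ready_for_removal(nodes, now, delay=SCALE_DOWN_DELAY):
    """Return names of nodes that may be scaled down (illustrative only)."""
    ready = []
    for name, info in nodes.items():
        since = info["unneeded_since"]
        if since is None:
            continue  # node is still needed
        if info["blocks_scale_down"]:
            continue  # e.g. hosts a pod that must not be evicted
        if now - since >= delay:
            ready.append(name)
    return sorted(ready)


now = datetime(2024, 1, 1, 12, 0)
nodes = {
    "node-a": {"unneeded_since": now - timedelta(minutes=12), "blocks_scale_down": False},
    "node-b": {"unneeded_since": now - timedelta(minutes=3), "blocks_scale_down": False},
    "node-c": {"unneeded_since": now - timedelta(minutes=30), "blocks_scale_down": True},
}
print(nodes_ready_for_removal(nodes, now))  # prints ['node-a']
```

Here node-c has been idle the longest but is never removed, which shows how a single pod that cannot be evicted keeps a whole node alive.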

How Kubernetes Autoscalers Interact Together

If you would like to reach nirvana when autoscaling your Kubernetes cluster, you will need to use the pod-level autoscalers together with the CA.


Author – Sumit Jaiswal
